HARIA - Human-Robot Sensorimotor Augmentation

Wearable Sensorimotor Interfaces and Supernumerary Robotic Limbs for Humans with Upper-Limb Disabilities

- Contact:

- Funding:

EU HORIZON-CL4-2021 project

- Start date:

2022

- End date:

2026

The goal of HARIA is to lay the foundations for a new research field: human sensorimotor augmentation. To achieve this goal, the project develops AI-powered technologies in the form of wearable limbs and sensorimotor interfaces. These interfaces let users directly control and feel the supernumerary limbs by exploiting the redundancy of the human sensorimotor system. The target group is people with unilateral or bilateral chronic motor disabilities of the upper limb, whose quality of life the project aims to improve.

H²T will develop an AI-based framework that integrates affordance-based scene representations, user-centered and context-sensitive adaptation of robot skills, and role allocation in human-robot manipulation tasks of varying complexity (unimanual manipulation, bimanual human-robot co-manipulation, and bimanual robot manipulation).

euROBIN - European ROBotics and AI Network

- Contact:

- Funding:

EU H2020 project

- Start date:

2022

- End date:

2026

euROBIN is the network of excellence that brings together European expertise in robotics and AI. It creates a unified pan-European platform for research and development. For the first time, a large number of renowned research labs across Europe are jointly conducting research on AI-based robotics. Its goals include both significant scientific advances on core questions of AI-based robotics and strengthening the European scientific robotics community by providing an inclusive community platform. The network is open to the entire robotics community and offers cascade funding mechanisms to double the number of members over the coming years.

euROBIN comprises 31 partners from 14 countries. It is coordinated by the Institute of Robotics and Mechatronics of the German Aerospace Center (DLR) and includes both leading research institutions and outstanding industrial partners from various sectors. The network is funded by the EU and Switzerland with a total of 12.5 million euros and started on 1 July 2022.

H²T contributes to the application area "personal robotics" and the science area "robot learning". Its goal is to develop humanoid assistive robots with advanced capabilities that perform tasks in human-centered environments and learn from humans and from interaction with the world, with a particular focus on transferring skills across different robots.

Reallabor robotische KI (Real-World Lab Robotic AI)

- Contact:

- Funding:

Ministry of Science, Research and the Arts of Baden-Württemberg, as part of the state's digitalization strategy "digital@bw"

- Start date:

2021

- End date:

2023

In the KIT Real-World Lab "Robotic Artificial Intelligence", humanoid robots as embodied artificial intelligence (AI) meet their potential future users. Humanoid robots placed in various public spaces, from daycare centers and schools to museums, the city library and hospitals, are meant to make artificial intelligence tangible for people. Through a variety of experiments, the lab aims both to raise broad awareness of AI technology and to gain new insights for the research and development of future AI robots.

Here, society and science meet on an equal footing. Through a bidirectional exchange of knowledge and experience between society and science, the lab aims to gain the insights needed to develop robotic AI systems that people need and want. To this end, concrete practical applications of robotic AI are developed together with civil-society actors, and both their opportunities and their risks are investigated. The goal is to make the potential of robotic AI for our society directly tangible and understandable, but also to demystify it.

JuBot - Jung bleiben mit Robotern (Staying Young with Robots)

- Contact:

- Funding:

Carl Zeiss Foundation (Carl-Zeiss-Stiftung)

- Start date:

2021

- End date:

2026

In an aging society, robotics can make a key contribution to supporting and caring for older adults. The aim is to maintain and promote the independence and health of older adults and to support care staff. The goal of the project, funded by the Carl Zeiss Foundation, is to develop humanoid assistive robots and wearable exoskeletons that provide personalized, learning assistance for older adults in managing everyday life. To this end, an interdisciplinary consortium at KIT of computer scientists, mechanical engineers, electrical engineers, sports scientists, architects, and representatives of work science and technology assessment is working on robust, holistic robot systems and skills.

H²T leads the development of the assistive robot systems and coordinates the integration of the various disciplines into robotics. Its research focuses on the robust execution of household tasks with humanoid assistive robots, the personalization and adaptivity of assistive functions, and the development and control of novel exoskeleton mechanisms.

CATCH-HEMI - Patient-centered model to support clinical decision-making and optimized rehabilitation in children with hemiparesis

- Contact:

- Funding:

BMBF

- Start date:

2020

- End date:

2023

Adolescents, children, newborns and even unborn children can suffer a stroke. One third of children with neonatal strokes and half of those with postnatal strokes develop hemiparesis, in which the upper limb is often more severely affected than the lower limb. The project aims to develop novel methods for improving the therapeutic treatment of children with hemiparesis following a stroke. Using machine learning methods and tools, KIT is developing a new transdisciplinary, patient-centered model (PSM) that fuses genetic patient data, neuroimaging, motion data and clinical assessment. The model is intended to improve understanding of the relationships between genetic and phenotypic data in stroke, support clinical decision-making, and enable optimized, individualized rehabilitation. The project is part of the transnational joint project "CATCH-HEMI" within the ERA-PerMed funding initiative (Personalised Medicine).

How can a factory autonomously adapt to constantly changing conditions? The research project "AgiProbot" at the Karlsruhe Institute of Technology (KIT) addresses exactly this question. The project aims to design an agile production system that uses artificial intelligence to respond dynamically to uncertain product specifications. One example application is "remanufacturing", in which used products are recovered and disassembled, and selected components are fed back into production processes. The question is tackled by an interdisciplinary research group of several institutes from mechanical engineering, electrical engineering and information technology, and computer science, in order to pool complementary expertise in a targeted way.

Robdekon: Robotic Systems for Decontamination in Hazardous Environments

- Contact:

- Funding:

BMBF

- Start date:

2018

- End date:

2022

ROBDEKON stands for "Robotersysteme für die Dekontamination in menschenfeindlichen Umgebungen" (Robotic Systems for Decontamination in Hazardous Environments) and is a competence center dedicated to research on autonomous and semi-autonomous robot systems. In the future, these systems are to carry out decontamination work independently so that humans can stay out of the danger zone.

The goal of ROBDEKON is the research and development of novel robot systems for decontamination tasks. Research topics include mobile robots for rough terrain, autonomous construction machines and robot manipulators, as well as decontamination concepts, planning algorithms, multisensory 3D environment mapping and teleoperation using virtual reality. Artificial intelligence methods enable the robots to carry out assigned tasks autonomously or semi-autonomously.

Within the ROBDEKON competence center, H²T's research focuses on developing methods for single- and multi-handed grasping, mobile manipulation, and the planning of manipulation actions for handling contaminated objects. Visual perception and the autonomous execution of decontamination tasks are central research questions.

Invasive Computing

CC-King

- Contact:

- Funding:

Ministry of Economic Affairs, Baden-Württemberg

- Start date:

2020

- End date:

2021

Funded by the Ministry of Economic Affairs, Labour and Housing of the State of Baden-Württemberg, the Karlsruhe Competence Center for AI Engineering links cutting-edge AI research with established engineering disciplines. It investigates the foundations and develops the tools needed to make it easier to use artificial intelligence (AI) and machine learning (ML) methods in industrial practice. Its application focus is on industrial production and mobility. The competence center offers companies concrete support.

In this project, H²T investigates how AI and ML methods can be used to obtain traceable and explainable results and decisions. To this end, it studies methods for propagating the uncertainties and confidences of individual components through the overall system.

Organic Machine Learning (Organisches Maschinelles Lernen, OML)

- Contact:

- Funding:

BMBF

- Start date:

2019

- End date:

2022

The goal of the project "Organic Machine Learning" (OML) is to break with the conventional, rigid approach to training and deploying machine learning systems and to develop machine learning methods that resemble organic learning, especially human learning, in that the systems learn throughout their entire lifetime, in particular also while deployed.

Learning is to become more organic. Instead of learning from very large, clean and well-structured training data that had to be laboriously prepared, the systems developed in OML are to learn from heterogeneous data that has been prepared only partly or not at all, as it occurs in the real world, and to manage with less training data, much as humans do. Different sources, for example interaction with humans and the system's own experience, are to be combined multimodally, and learning is to be focused specifically on cases of uncertainty. This requires the systems to recognize in which cases they are uncertain or where further learning is necessary. In addition, learning systems should no longer be a "black box" whose inner workings outsiders cannot see. Instead, they should be able to explain their decision-making and their actions. The ability to justify their decisions makes the systems more acceptable to humans and is what makes their use in many real-world applications possible in the first place.

Finally, the developed systems are to be integrated into a complete robotic system. In an interactive robot programming scenario, the robot is to acquire new skills from scratch by learning from physical, visual and verbal interaction with humans as well as from its own experience, much as an apprentice is taught by a master.

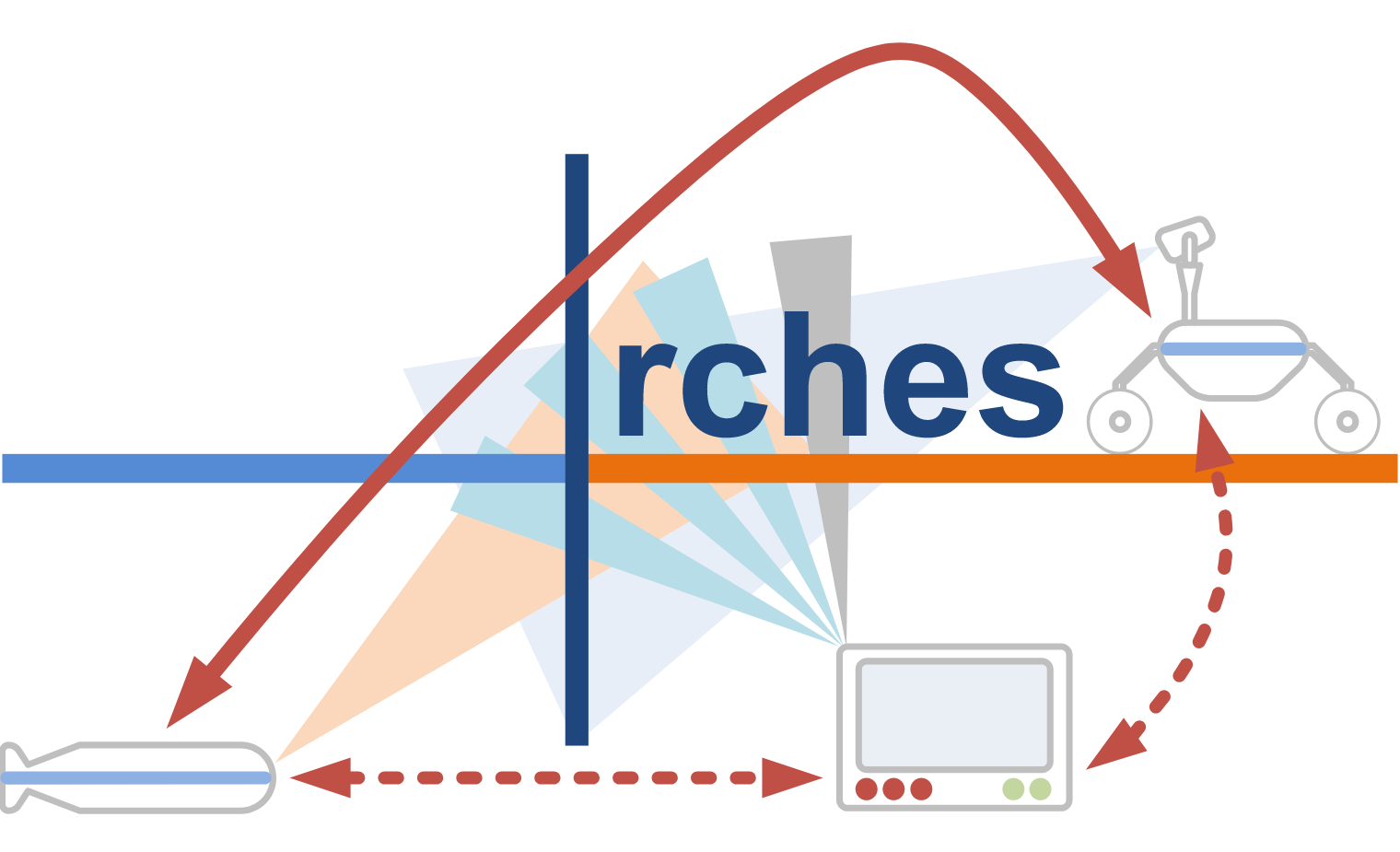

ARCHES: Autonomous Robotic Networks to Help Modern Societies

- Contact:

- Funding:

Helmholtz

- Start date:

January 2018

- End date:

December 2020

The aim of the Helmholtz Future Project ARCHES is the development of heterogeneous, autonomous and interconnected robotic systems in a consortium of the Helmholtz Centers DLR, AWI, GEOMAR and KIT from 2018 to 2020. The intended fields of application are as heterogeneous as the robotic systems themselves and range from environmental monitoring of the oceans through technical crisis intervention to the exploration of the solar system.

KIT leads the efforts to develop methods for grasping and manipulation in the domains of planetary and ocean exploration. In particular, KIT will develop a manipulation toolkit providing methods for grasp, motion and manipulation planning as well as approaches for the coordinated execution of manipulation tasks in robot teams. The focus lies on providing grasping and manipulation skills for known, familiar and unknown rigid objects. The skills will be tailored to several levels of autonomy, ranging from teleoperation to semi-autonomous and autonomous execution.

TERRINet: The European Robotics Research Infrastructure Network

- Contact:

- Funding:

EU H2020 project

- Start date:

2017

- End date:

2021

TERRINet is the European Robotics Research Infrastructure Network and aims to train a new generation of robotics researchers to develop the robots of the future. The partners offer researchers, entrepreneurs, students and industrial partners top-quality infrastructures, robot platforms, excellent research services and training opportunities to promote research and innovation in robotics.

In addition to access to high-quality infrastructures and services, TERRINet serves as a multidisciplinary, transnational environment that facilitates the cross-fertilization of ideas and the exchange of excellent scientific research. Access to infrastructures, platforms, resources and ideas enables TERRINet to set up testbeds and evaluation opportunities for innovations and to advance technology transfer in Europe.

Within TERRINet, KIT provides access to its robotics infrastructure for research in humanoid robotics and wearable robotics. This covers topics such as advanced control, vision- and haptics-based grasping, mobile manipulation, learning from human observation and from experience, and context-sensitive control of prostheses and orthoses.

IMAGINE: Robots Understanding Their Actions by Imagining Their Effects

- Contact:

- Funding:

EU H2020 project

- Start date:

2017

- End date:

2021

IMAGINE aims to develop methods that enable robots to gain a better understanding of their interaction with the environment and of the changes they cause in it. This is intended to improve the autonomy, flexibility and robustness of robots' manipulation skills in the face of unforeseen changes. The approach is based on the development of a low-cost gripper for the disassembly and recycling of electromechanical devices, such as hard disk drives. The structural understanding of electromechanical devices and the functional understanding of robot actions are to be extended so that the robot can disassemble categories of electromechanical devices and fixtures, monitor the success of its actions and, if necessary, propose correction strategies.

KIT is responsible for developing a multifunctional, reconfigurable gripper for disassembling electromechanical devices. KIT also leads the tasks on learning disassembly actions from human observation and on integrating components for object and affordance perception, planning, simulation and action execution into a holistic system architecture, which will be evaluated on several functional demonstrators.

INOPRO: Intelligent Orthotics and Prosthetics (Intelligente Orthetik und Prothetik)

- Contact:

- Funding:

BMBF

- Start date:

2016

- End date:

2021

INOPRO develops personalized, intelligent prostheses and orthoses that have a higher degree of autonomy and enable symbiotic interaction with the human, intuitive support and better adaptation to the human, in order to significantly improve the quality of life of people who use prostheses and orthoses.

KIT leads the subproject on developing intelligent, personalized hand prostheses that are adapted in size and functionality to the user's needs and equipped with embedded machine intelligence that enables intuitive human-machine interaction while reducing the user's cognitive load. To achieve this goal, the subproject researches the seamless integration of intelligent mechanics, 3D manufacturing techniques, embedded systems and robust control with few, simple control signals. To increase the prostheses' degree of autonomy with respect to context-sensitive grasping, it investigates methods for the low-dimensional representation of grasps and for data-driven learning of a grasp library from human observation, as well as algorithms for resource-aware processing of visual data with artificial neural networks.

KIT is also involved in developing novel controllers for leg prostheses and orthoses. This includes developing novel joint and mechanism concepts that realize synergistic coupling in the lower limb, and investigating methods for action classification, data-driven estimation of the user's state based on multimodal sensory information, and context-sensitive control.

SECONDHANDS: A robot assistant for industrial maintenance

- Contact:

- Funding:

EU H2020 project

- Start date:

2015

- End date:

2020

Description

The goal of SecondHands is to design a robot that can offer help to a maintenance technician in a proactive manner. The robot will be a second pair of hands that can assist the technician when he/she is in need of help. Thus, the robot should recognize human activity, anticipate human needs and proactively offer assistance when appropriate, in real time and in a dynamically changing real world. The robot assistant will increase the efficiency and productivity of maintenance technicians in order to ensure the smooth running of production machinery, thereby maximizing output and return on investment.

KIT leads the tasks of designing the new robot, grasping and mobile manipulation, as well as natural language and multimodal interfaces. Based on the ARMAR humanoid robot technologies, a new humanoid robot for maintenance tasks will be developed and validated in several maintenance tasks in a warehouse environment. A special focus lies on developing methods for task-specific grasping of familiar objects, object handover, vision- and haptics-based reactive grasping strategies, and mobile manipulation tasks, while taking into account uncertainties in perception and action. To facilitate natural human-robot interaction, KIT will implement a speech recognition and dialogue management system that enables maintenance technicians to conduct spoken dialog with the robot.

TIMESTORM: Mind and Time - Investigation of the temporal attributes of human-machine synergetic interaction

- Contact:

- Funding:

EU FET-ProActive project

- Start date:

2015

- End date:

2018

Description

TimeStorm aims at equipping artificial systems with humanlike cognitive skills that benefit from the flow of time by shifting the focus of human-machine confluence to the temporal, short- and long-term aspects of symbiotic interaction. The integrative pursuit of research and technological developments in time perception will contribute significantly to ongoing efforts in deciphering the relevant brain circuitry and will also give rise to innovative implementations of artifacts with profoundly enhanced cognitive capacities. TimeStorm promotes time perception as a fundamental capacity of autonomous living biological and computational systems that plays a key role in the development of intelligence. In particular, time is important for encoding, revisiting and exploiting experiences (knowing), for making plans to accomplish timely goals at certain moments (doing), for maintaining the identity of self over time despite changing contexts (being).

The main role of KIT in TimeStorm is to investigate the temporal information in the perception and execution of manipulation actions and to integrate time processing mechanisms in humanoid robots. In particular, we investigate how semantic representation (top-down) and hierarchical segmentation (bottom-up) of human demonstrations based on spatio-temporal object interactions can be combined to facilitate generalization of action durations. This would allow a robot to scale perceived and learnt temporal information of an action in order to perform the same and other actions with various temporal lengths.

Robots Exploring Tools as Extensions to their Bodies Autonomously (REBA+)

- Contact:

- Funding:

- Start date:

2015

- End date:

2018

REBA

- enhancing the scope from the body morphology to a representation of body-tool-environment linkages

- enhancing the scope from a representation of morphology to a representation that includes control

- enhancing the scope from minimal DOF systems to systems that offer and exploit redundant degrees of freedom

Description

The I-SUPPORT project envisions the development and integration of an innovative, modular, ICT-supported service robotics system that supports and enhances frail older adults’ motion and force abilities and assists them in successfully, safely and independently completing the entire sequence of bathing tasks, such as properly washing their back, their upper parts, their lower limbs, their buttocks and groin, and to effectively use the towel for drying purposes. Advanced modules of cognition, sensing, context awareness and actuation will be developed and seamlessly integrated into the service robotics system to enable the robotic bathing system.

KIT leads the tasks concerning learning motion primitives from human observation and kinesthetic teaching for a soft robot arm that should provide help in washing and drying tasks. The learned motion primitives should be represented in a way that allows adaptation to different contexts (soaping, washing, drying), body parts (back, upper and lower limbs, neck) and users. To achieve this, adaptive representations of the learned motion primitives will be developed that can account for different requirements, such as encoding different motion styles (circular and linear) and adapting to the different softness of different body parts. To enable the robot arm to handle different washing and drying tools with varying softness, we will investigate how motion primitives can be enriched with models that encode correlations between object properties and action parameters. Furthermore, KIT is addressing the personalization and adaptation of the robotic bathing system to the user by taking into account the users' preferences and previous sensorimotor experience. Based on a reference model of the human body, the Master Motor Map, which defines the kinematics and dynamics of the human body with regard to global body parameters such as height and weight, we will derive individual models of the different users. These models will be used to generate initial washing and/or drying behavior, which will be refined based on sensorimotor experience obtained from the robot arm.

WALK-MAN: Whole-body Adaptive Locomotion and Manipulation

- Contact:

- Funding:

EU-FP7

- Start date:

2013

- End date:

2017

Description

WALK-MAN aims to enhance the capabilities of existing humanoid robots, enabling them to assist or replace humans in emergency situations, including rescue operations at damaged or dangerous sites such as destroyed buildings or power plants. The WALK-MAN robot will demonstrate human-level capabilities in locomotion, balance and manipulation. The scenario challenges the robot in several ways: walking on unstructured terrain, in cluttered environments and among a crowd of people, as well as crawling over a debris pile. The project's results will be evaluated using realistic scenarios, also in consultation with civil defence bodies.

KIT leads the tasks concerning multimodal perception for loco-manipulation and the representation of whole-body affordances. The partly unknown environments in which the robot has to operate motivate an exploration-based approach to perception. This approach will integrate whole-body actions and multimodal perceptual modalities such as visual, haptic, inertial and proprioceptive sensory information. For the representation of whole-body affordances, i.e., joint perception-action representations of whole-body actions associated with objects and/or environmental elements, we will rely on our previous work on Object-Action Complexes (OAC), a grounded representation of sensorimotor experience that binds objects, actions and attributes in a causal model and links sensorimotor information to symbolic information. We will investigate the transferability of grasping OACs to balancing OACs, inspired by the analogy between a stable whole-body configuration and a stable grasp of an object.

KoroiBot: Improving humanoid walking capabilities by human-inspired mathematical models, optimization and learning

- Contact:

- Funding:

EU-FP7

- Start date:

2013

- End date:

2016

Description

KoroiBot is a three-year project funded by the European Commission under FP7-ICT-2013-10. The goal of the project is to investigate how humans walk, e.g., on stairs and slopes, on soft and slippery ground, over beams and seesaws, and to create mathematical models and learning methods for humanoid walking. The project will study human walking, develop techniques for increased humanoid walking performance and evaluate them on existing state-of-the-art humanoid robots.

KIT leads the tasks concerning human walking experiments, the establishment of a large-scale human walking database, and the development of human and humanoid models as a basis for deriving rules that transfer general motion and control laws between different embodiments and for generating different walking types. Based on the developed models and transfer rules, we will study how to implement balancing and push-recovery strategies to deal with different types of perturbations in free and constrained situations. Furthermore, we will investigate the role of prediction in walking as well as the role of different sensory feedback, such as vision, vestibular sensing and foot haptics, in balancing. To this end, we will implement a control and action selection schema emphasizing predictive control mechanisms, which rely on estimating expected perturbations from multimodal sensory feedback and past sensorimotor experience. The control schema will be validated in the context of predicting and selecting push-recovery actions.

XPERIENCE: Robots Bootstrapped through Learning from Experience

- Contact:

- Funding:

EU-FP7

- Start date:

2011

- End date:

2015

Description

Current research in enactive, embodied cognition is built on two central ideas: 1) physical interaction with and exploration of the world allows an agent to acquire and extend intrinsically grounded cognitive representations, and 2) representations built from such interactions are much better adapted to guiding behaviour than human-crafted rules or control logic. Exploration and discriminative learning, however, are relatively slow processes. Humans, on the other hand, are able to rapidly create new concepts and react to unanticipated situations using their experience. “Imagining” and “internal simulation”, i.e., generative mechanisms that rely on prior knowledge, are employed to predict the immediate future and are key to increasing the bandwidth and speed of cognitive development. Current artificial cognitive systems are limited in this respect, as they do not yet make efficient use of such generative mechanisms to extend their cognitive properties.

Solution

The Xperience project will address this problem by structural bootstrapping, an idea taken from child language acquisition research. Structural bootstrapping is a method of building generative models, leveraging existing experience to predict unexplored action effects and to focus the hypothesis space for learning novel concepts. This developmental approach enables rapid generalization and acquisition of new knowledge and skills from little additional training data. Moreover, thanks to shared concepts, structural bootstrapping enables enactive agents to communicate effectively with each other and with humans. Structural bootstrapping can be employed at all levels of cognitive development (e.g. sensorimotor, planning, communication).

Project Goals

- Xperience will demonstrate that state‐of‐the‐art enactive systems can be significantly extended by using structural bootstrapping to generate new knowledge. This process is founded on explorative knowledge acquisition, and subsequently validated through experience‐based generalization.

- Xperience will implement, adapt, and extend a complete robot system for automating introspective, predictive, and interactive understanding of actions and dynamic situations. Xperience will evaluate and benchmark this approach on existing state-of-the-art humanoid robots, integrating the different components into a complete system that can interact with humans.

Expected Impact

By equipping embodied artificial agents with the means to exploit prior experience via generative inner models, the methods to be developed here are expected to impact a wide range of autonomous robotics applications that benefit from efficient learning through exploration, predictive reasoning and external guidance.

HEiKA-EXO: Optimization-based development and control of an exoskeleton for medical applications

- Contact:

- Funding:

HEiKA

- Start date:

January 2013

- End date:

December 2013

Description

About GRASP

GRASP is an Integrated Project funded by the European Commission through its Cognition Unit under the Information Society Technologies of the seventh Framework Programme (FP7). The project was launched on 1st of March 2008 and will run for a total of 48 months.

The aim of GRASP is the design of a cognitive system capable of performing grasping and manipulation tasks in open-ended environments, dealing with novelty, uncertainty and unforeseen situations. To meet the aim of the project, studying the problem of object manipulation and grasping will provide a theoretical and measurable basis for system design that is valid in both human and artificial systems. This is of utmost importance for the design of artificial cognitive systems that are to be deployed in real environments and interact with humans and other agents. Such systems need the ability to exploit the innate knowledge and self-understanding to gradually develop cognitive capabilities. To demonstrate the feasibility of our approach, we will instantiate, implement and evaluate our theories and hypotheses on robot systems with different embodiments and complexity.

GRASP goes beyond the classical perceive-act or act-perceive approach and implements a predict-act-perceive paradigm that originates from findings of human brain research and results of mental training in humans, where self-knowledge is retrieved through different emulation principles. The knowledge of grasping in humans can be used to provide the initial model of the grasping process, which then has to be grounded through introspection in the specific embodiment. To achieve open-ended cognitive behaviour, we use surprise to steer the generation of grasping knowledge and modelling.

About PACO-PLUS

PACO-PLUS is an Integrated Project funded by the European Commission through its Cognition Unit under the Information Society Technologies programme of the Sixth Framework Programme (FP6). The project was launched on 1 February 2006 and ran for a total of 48 months.

PACO-PLUS brings together an interdisciplinary research team to design and build cognitive robots capable of developing perceptual, behavioural and cognitive categories that can be used, communicated and shared with other humans and artificial agents. To demonstrate our approach, we are building robot systems that will display increasingly advanced cognitive capabilities over the course of the programme. They will learn to operate in the real world and to interact and communicate with humans. To do this, they must model and reflectively reason about their perceptions and actions in order to learn, act and react appropriately. We hypothesize that such understanding can only be attained by embodied agents and requires the simultaneous consideration of perception and action.

Our approach rests on three foundational assumptions:

- Objects and actions are inseparably intertwined in cognitive processing; that is, “Object-Action Complexes” (OACs) are the building blocks of cognition.

- Cognition is based on reflective learning, contextualizing and then reinterpreting OACs to learn more abstract OACs, through a grounded sensing and action cycle.

- The core measure of effectiveness for all learned cognitive structures is: Do they increase situation reproducibility and/or reduce situational uncertainty in ways that allow the agent to achieve its goals?

Collaborative Research Center 588 (Sonderforschungsbereich 588): Learning and Cooperating Multimodal Humanoid Robots (SFB 588)

- Contact person:

- Funding:

- Start date:

1 July 2001

- End date:

30 June 2012

Description

The Collaborative Research Center 588 "Humanoid Robots - Learning and Cooperating Multimodal Robots" was established on 1 July 2001 by the Deutsche Forschungsgemeinschaft (DFG) and ran until 30 June 2012.

The goal of this project is to develop concepts, methods and concrete mechatronic components for a humanoid robot that is able to share its activity space with a human partner. With the aid of this partially anthropomorphic robot system, it will be possible to step out of the "robot cage" and enable direct contact with humans.

The term multimodality covers communication modalities that are intuitive for the user, such as speech, gesture and haptics (physical contact between the human and the robot), which will be used to command or instruct the robot system directly.

In cooperation between the user and the robot, for example in the joint manipulation of objects, it is important for the robot to recognise the human's intention, to remember the actions already carried out together and to apply this knowledge correctly in each individual case. Considerable effort is devoted to safety, as it is a very important aspect of human-machine cooperation.

An outstanding property of the system is its ability to learn. This ability makes it possible to confront the system with new, previously unknown problems, for example new terms and new objects. Even new motions can be learned with the aid of the human and corrected interactively by the user.

The Collaborative Research Center 588 is assigned to the Department of Informatics. More than 40 scientists from 13 institutes are involved in this project. These institutes belong to the Department of Informatics, the Faculty of Electrical and Information Engineering, the Faculty of Mechanical Engineering and the Faculty of Humanities and Social Sciences, as well as to the Fraunhofer Institute of Optronics, System Technologies and Image Exploitation (IOSB) and the Forschungszentrum Informatik Karlsruhe (FZI).

EU FET Flagship Initiative "Robot Companions for Citizens"

- Contact person:

- Funding:

EU-FET

- Start date:

2011

- End date:

2012

Description

The coordination action CA-RoboCom will design and describe the FET Flagship initiative “Robot Companions for Citizens” (RCC), including its scientific and technological framework, governance, financial and legal structure, funding scheme, competitiveness strategy and risk analysis.

The FET Flagship initiative RCC will realize a unique and unprecedented multidisciplinary science and engineering program supporting a radically new approach towards machines and how we deploy them in our society.

Robot Companions for Citizens is an ecology of sentient machines that will assist humans in the broadest possible sense, supporting and sustaining our welfare. RCC will have soft bodies based on the novel integration of solid articulated structures with flexible properties, and will display soft behavior based on new levels of perceptual, cognitive and emotive capabilities. RCC will be cognizant and aware of their physical and social world and respond accordingly. RCC will attain these properties because of their grounding in the most advanced sentient machines we know: animals.

Robot Companions for Citizens will validate our understanding of the general design principles underlying biological bodies and brains, establishing a positive feedback between science and engineering.

Driven by the vision and ambition of RCC, CA-RoboCom will, by means of an appropriate outreach strategy, involve all pertinent stakeholders: science and technology, society, finance, politics and industry. In addition to the commitment of its consortium, CA-RoboCom will involve a wide range of external experts in its working groups, its Advisory Board, and its European and International Cooperation Board. The CA-RoboCom consortium believes that, given the potentially transformative and disruptive effects of RCC on our society, their development and deployment have to be based on the broadest possible support platform.