Subproject D1 will explore the specific benefits and restrictions of invasive architectures in challenging real-time embedded systems, in particular in humanoid robotics. We will investigate the implementation of a cognitive robot control architecture, with its different processing hierarchies, on both invasive TCPAs (tightly coupled processor arrays) and RISC-based MPSoCs. The goal is to explore self-organisation techniques that efficiently allocate the available resources to the time-varying requirements of robotic applications. We expect that fewer computing resources will be needed to fulfil the application requirements than with traditional resource assignment at compile time.

HARIA - Human-Robot Sensorimotor Augmentation

Wearable Sensorimotor Interfaces and Supernumerary Robotic Limbs for Humans with Upper-Limb Disabilities

- Contact:

- Funding:

EU HORIZON-CL4-2021 Project

- Startdate:

2022

- Enddate:

2026

HARIA aims at laying the foundations of a new research field: human sensorimotor augmentation. To this end, HARIA will develop AI-powered supernumerary robotic limbs with wearable sensorimotor interfaces to enable users to directly control and feel the extra robotic limbs by exploiting the redundancy of the human sensorimotor system. HARIA addresses people with uni- or bi-lateral upper-limb chronic motor disabilities, improving their quality of life.

H²T will develop an AI-powered framework integrating affordance-based scene representations, user-centered and context-sensitive adaptation of robot skills, and role assignment in human-robot manipulation tasks with different levels of complexity: i) unimanual manipulation, ii) bimanual human-robot co-manipulation, and iii) bimanual robotic manipulation.

euROBIN - European ROBotics and AI Network

- Contact:

- Funding:

EU H2020 Project

- Startdate:

2022

- Enddate:

2026

euROBIN is the Network of Excellence that brings together European expertise in robotics and AI. It establishes a unified pan-European platform for research and development. For the first time, a large number of distinguished research labs across Europe are jointly researching AI-based robotics. Its goals include both significant scientific advances on core questions of AI-based robotics and strengthening the scientific robotics community in Europe by providing an integrative community platform. The network is open to the entire robotics community and provides cascade funding mechanisms to double its number of members over the next years.

euROBIN comprises 31 partners across 14 countries. It is coordinated by the Institute of Robotics and Mechatronics of the German Aerospace Center (DLR) and includes the highest-profile research institutions as well as outstanding industrial partners across sectors. The network was awarded a total of 12.5 million euros by the EU and Switzerland and launched on July 1st, 2022.

H²T contributes to the application domain “personal robotics” and the science domain “robot learning”, with the goal of developing assistive humanoid robots with advanced abilities to perform tasks in human-centered environments while learning from humans and from interaction with the world, with a special focus on the transferability of skills to different robots.

RealWorldLab robotics AI

- Contact:

- Funding:

Ministry of Science, Research and the Arts Baden-Württemberg as part of the state's "digital@bw" digitization strategy

- Startdate:

2021

- Enddate:

2023

At the KIT RealWorldLab robotics AI, humanoid robots as embodied artificial intelligence (AI) meet their potential future users. Humanoid robots placed at various locations in public space, from daycare centers and schools to museums, public libraries, and hospitals, are intended to make artificial intelligence tangible for people. Diverse experiments aim both to raise broad awareness of AI technology and to yield new insights for the research and development of future AI robots.

Here, society and science meet at eye level. Through a bidirectional exchange of knowledge and experience between the two, the project aims to gain insights for developing robotic AI systems that people need and want. To this end, concrete practical applications of robotic AI are developed together with civil society actors, and both their opportunities and their risks are explored. The goal is to make the potential of robotic AI directly tangible for society while also demystifying it.

JuBot - Staying young with robots

- Contact:

- Funding:

Carl-Zeiss-Foundation

- Startdate:

2021

- Enddate:

2026

In an aging society, robotics can make a key contribution to supporting and caring for elderly people, fostering and preserving their autonomy and health while also supporting their caregivers. The project, funded by the Carl-Zeiss-Foundation, aims at developing humanoid assistive robots and wearable exoskeletons that provide personalized, learning assistance to help elderly people master their daily lives. An interdisciplinary consortium of computer scientists, mechanical and electrical engineers, sport scientists, architects and researchers in ergonomics and technology assessment at KIT is developing robust, integrated robotic skills and systems.

H²T is leading the development of the assistive robots and coordinates the integration of the different research areas into robotics. Our research is focused on robust household tasks for humanoid assistive robots, the personalization and adaptivity of assistance as well as the development and control of novel mechanisms for exoskeletons.

CATCH-HEMI – Patient-centered model to support the clinical decision-making process and optimized rehabilitation for children with hemiplegia

- Contact:

- Funding:

BMBF

- Startdate:

2020

- Enddate:

2023

Adolescents, children, neonates and even the unborn can suffer a stroke. One-third of children with neonatal strokes and half with postnatal strokes develop hemiplegia, often affecting the upper extremity more than the lower. The project aims at developing novel methods for improved therapeutic assessment of children with hemiplegia as a result of a stroke. Using machine learning methods and tools, KIT is developing a new transdisciplinary patient-specific model that combines features from omics, neuroimaging, movement data and clinical assessment. The model should contribute to a better understanding of the relationship between genetic and phenotypic data in stroke and thus support the clinical decision-making process and enable an optimized individualized rehabilitation. The project is part of the transnational collaborative project "CATCH-HEMI" of the funding initiative ERA-PerMed (Personalised Medicine).

AgiProbot

How can a factory autonomously adapt to ever-changing conditions? The "AgiProbot" research project at the Karlsruhe Institute of Technology (KIT) addresses precisely this question. The project aims to design an agile production system that uses artificial intelligence to react dynamically to uncertain product specifications. An exemplary use case is so-called "remanufacturing", in which end-of-life products are recovered and disassembled, and selected components are fed back into the production processes. The question is being addressed by an interdisciplinary research group of several institutes from mechanical engineering, electrical engineering and information technology, and computer science, in order to bundle complementary competences in a targeted manner.

ROBDEKON: Robot Systems for Decontamination in Hostile Environments

- Contact:

- Funding:

BMBF

- Startdate:

2018

- Enddate:

2022

ROBDEKON stands for "Robot Systems for Decontamination in Hostile Environments" and is a competence center dedicated to the research of autonomous and semi-autonomous robot systems. In the future, these systems are to carry out decontamination work independently so that people can stay away from the danger zone.

The aim of ROBDEKON is to research and develop novel robot systems for decontamination tasks. Research topics include mobile robots for rough terrain, autonomous construction machines, robot manipulators as well as decontamination concepts, planning algorithms, multisensory 3D environment mapping and tele-operation using virtual reality. Artificial intelligence methods enable the robots to perform assigned tasks autonomously or semi-autonomously.

The research focus of H2T within the ROBDEKON competence center is on the development of methods for single- and multi-handed grasping, mobile manipulation, and the planning of manipulation actions for handling contaminated objects. Visual perception and the autonomous execution of decontamination tasks are central issues in this context.

Invasive Computing

Supported by the Ministry of Economics, Labor and Housing of the State of Baden-Württemberg, the Karlsruhe Competence Center for AI Engineering creates the link between top-level AI research and established engineering disciplines. It researches the fundamentals and develops the necessary tools to facilitate the use of artificial intelligence (AI) and machine learning (ML) methods in business practice. The focus is on industrial production and mobility as fields of application. The competence center offers concrete support to companies.

In the project, H2T investigates how AI and ML methods can be used to achieve comprehensible and explainable results and decisions. To this end, methods for propagating uncertainties and confidence of individual components through the overall system are to be investigated.

Organic Machine Learning (OML)

The aim of the project "Organic Machine Learning" (OML) is to break with the conventional, rigid approach to training and deploying machine learning systems and to develop machine learning methods that resemble organic learning, especially human learning, in which systems learn throughout their lifetime and especially during deployment.

Learning should become more organic. Instead of learning from very large, clean, and well-structured training data that must be elaborately prepared, the systems developed in OML should learn from heterogeneous data that is only partially prepared or not prepared at all, as is the case in the real world, and should get by with less training data, similar to humans. Different sources, for example interaction with people and the system's own experience, are to be combined multimodally, and learning is to be focused more specifically on cases of uncertainty. To achieve this, the systems must be able to recognize where they are uncertain or where further learning is necessary. Moreover, learning systems should no longer be a "black box" whose inner workings are opaque to outsiders. Instead, they should be able to explain their decisions and actions. This ability to justify their decisions makes the systems more acceptable to humans and makes their use possible in many real-world applications.

Finally, the developed systems are to be brought together in an overall robotic system. In an interactive robot programming scenario, the robot is to learn new skills from scratch through physical, visual and verbal interaction with humans as well as from its own experience, similar to how an apprentice is taught by a master.

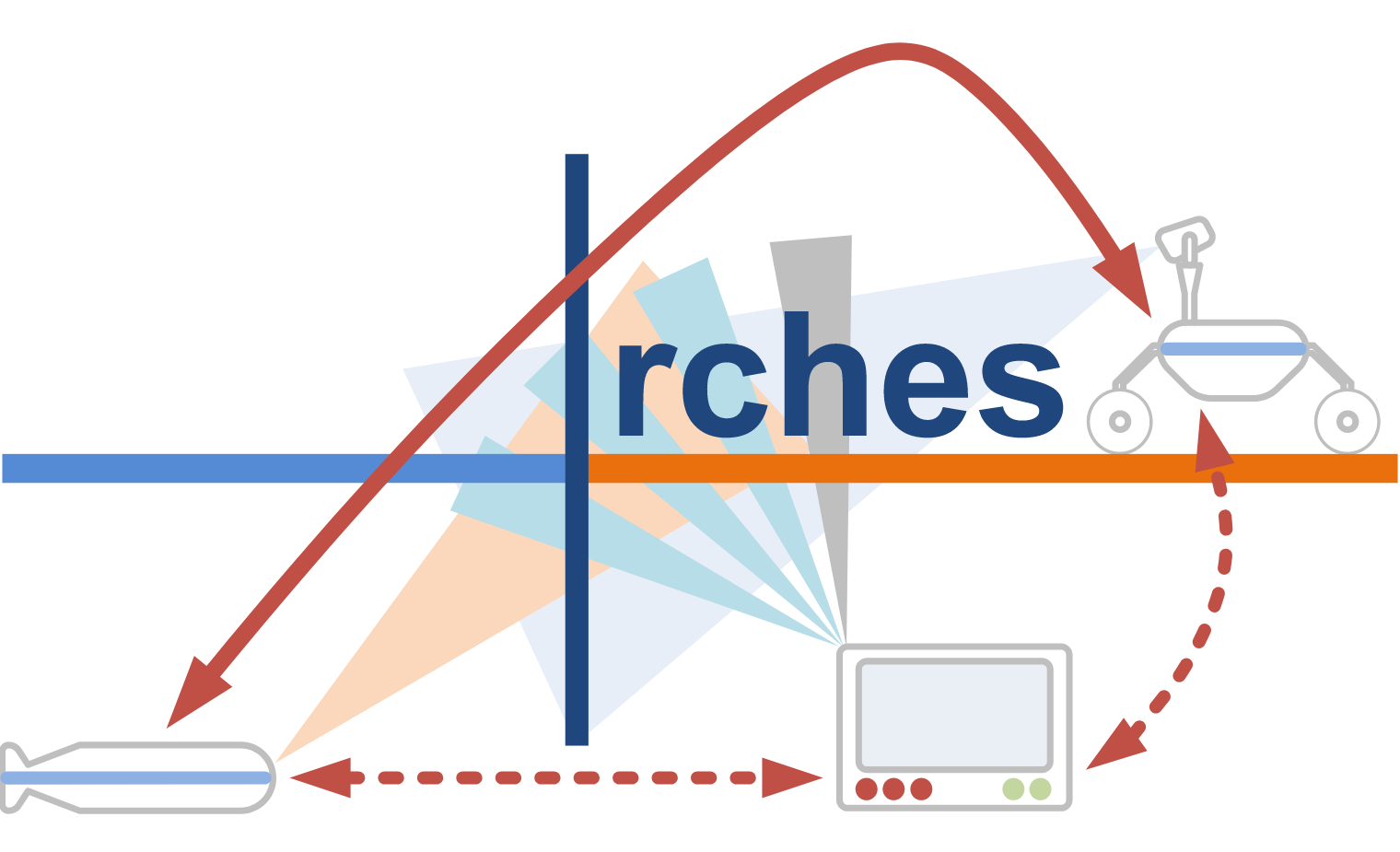

ARCHES: Autonomous Robotic Networks to Help Modern Societies

- Contact:

- Funding:

Helmholtz

- Startdate:

January 2018

- Enddate:

December 2020

The aim of the Helmholtz Future Project ARCHES, carried out from 2018 to 2020 by a consortium of the Helmholtz Centers DLR, AWI, GEOMAR and KIT, is the development of heterogeneous, autonomous and interconnected robotic systems. The intended fields of application are as heterogeneous as the robotic systems themselves and range from environmental monitoring of the oceans through technical crisis intervention to the exploration of the solar system.

KIT leads the efforts to develop methods for grasping and manipulation in the domains of planetary and ocean exploration. In particular, KIT will develop a manipulation toolkit providing methods for grasp, motion and manipulation planning as well as approaches for the coordinated execution of manipulation tasks in robot teams. The focus lies on providing grasping and manipulation skills for known, familiar and unknown rigid objects. The skills will be tailored to several levels of autonomy, ranging from teleoperation to semi-autonomous and autonomous execution.

TERRINet: The European Robotics Research Infrastructure Network

- Contact:

- Funding:

EU H2020 Project

- Startdate:

2017

- Enddate:

2021

TERRINet, the European Robotics Research Infrastructure Network, aims to train a new generation of robotics researchers to develop future robots. The partners offer researchers, entrepreneurs, students and industrial partners high-quality infrastructures, robotic platforms, excellent research services and training opportunities to support research and innovation in robotics.

In addition to providing access to high-quality infrastructures and services, TERRINet serves as a multidisciplinary, transnational environment that facilitates cross-fertilization of ideas and the exchange of excellent scientific research. Access to infrastructures, platforms, resources and ideas enables TERRINet to establish test beds and evaluation facilities for innovation and to promote technology transfer in Europe.

Within the framework of TERRINet, KIT provides access to robotics infrastructure for research in the fields of humanoid robotics and wearable robotics. This includes topics such as advanced control, vision- and haptics-based grasping, mobile manipulation, learning from human observation and experience, and context-sensitive control of prostheses and orthoses.

IMAGINE: Robots Understanding Their Actions by Imagining Their Effects

- Contact:

- Funding:

EU H2020 Project

- Startdate:

2017

- Enddate:

2021

IMAGINE aims to develop methods that enable robots to gain a better understanding of how they interact with their environment and how their actions change it. This should improve the autonomy, flexibility and robustness of robots' manipulation capabilities in the face of unforeseen changes. The approach is based on the development of a low-cost gripper for the dismantling and recycling of electromechanical devices, such as hard disks. The structural understanding of electromechanical devices and the functional understanding of robot actions will be extended to disassemble whole categories of electromechanical devices and appliances, to monitor the success of actions and to propose corrective strategies if necessary.

KIT is responsible for the development of a multifunctional reconfigurable gripper for disassembling electromechanical devices. KIT also manages the tasks of learning disassembly actions from human observation and the integration of components of object and affordance perception, planning, simulation, and action execution into a holistic system architecture that is evaluated on several functional demonstrators.

INOPRO

In INOPRO, personalized, intelligent prostheses and orthoses are being developed that have a higher degree of autonomy and enable symbiotic interaction with the user, intuitive support and better adaptation to the user, in order to significantly improve the quality of life of prosthesis and orthosis wearers.

KIT is leading the subproject on intelligent, personalized hand prostheses that are adapted to the user's needs in terms of size and functionality and are equipped with embedded machine intelligence that enables intuitive human-machine interaction while reducing the user's cognitive load. To achieve this goal, research is being conducted on the seamless integration of intelligent mechanics, 3D manufacturing techniques, embedded systems and robust control with few, simple control signals. To increase the autonomy of prostheses with respect to context-sensitive grasping, methods for the low-dimensional representation of grasps and the data-driven learning of a grasp library from human observation are investigated, as well as algorithms for the resource-efficient processing of visual data with artificial neural networks.

Furthermore, KIT is involved in the development of novel control methods for leg prostheses and orthoses. To this end, novel concepts for joints and mechanisms that realize synergistic coupling in the lower extremity are developed, and methods for action classification, data-driven estimation of the user's state based on multimodal sensory information, and context-sensitive control are investigated.

SECONDHANDS: A robot assistant for industrial maintenance

- Contact:

- Funding:

EU H2020 Project

- Startdate:

2015

- Enddate:

2020

Description

The goal of SecondHands is to design a robot that can offer help to a maintenance technician in a proactive manner. The robot will be a second pair of hands that can assist the technician when he/she is in need of help. Thus, the robot should recognize human activity, anticipate human needs and proactively offer assistance when appropriate, in real time, and in a dynamically changing real world. The robot assistant will increase the efficiency and productivity of maintenance technicians in order to ensure the smooth running of production machinery, thereby maximizing output and return on investment.

KIT leads the tasks of designing the new robot, grasping and mobile manipulation, and natural language and multimodal interfaces. Based on the ARMAR humanoid robot technologies, a new humanoid robot for maintenance tasks will be developed and validated in several maintenance tasks in a warehouse environment. A special focus is the development of methods for task-specific grasping of familiar objects, object handover, vision- and haptics-based reactive grasping strategies, and mobile manipulation tasks, while taking into account uncertainties in perception and action. To facilitate natural human-robot interaction, KIT will implement speech recognition and dialogue management to enable maintenance technicians to conduct a spoken dialogue with the robot.

TIMESTORM: Mind and Time - Investigation of the temporal attributes of human-machine synergetic interaction

- Contact:

- Funding:

EU FET-ProActive Project

- Startdate:

2015

- Enddate:

2018

Description

TimeStorm aims at equipping artificial systems with humanlike cognitive skills that benefit from the flow of time by shifting the focus of human-machine confluence to the temporal, short- and long-term aspects of symbiotic interaction. The integrative pursuit of research and technological developments in time perception will contribute significantly to ongoing efforts in deciphering the relevant brain circuitry and will also give rise to innovative implementations of artifacts with profoundly enhanced cognitive capacities. TimeStorm promotes time perception as a fundamental capacity of autonomous living biological and computational systems that plays a key role in the development of intelligence. In particular, time is important for encoding, revisiting and exploiting experiences (knowing), for making plans to accomplish goals at specific moments (doing), and for maintaining the identity of the self over time despite changing contexts (being).

The main role of KIT in TimeStorm is to investigate the temporal information in the perception and execution of manipulation actions and to integrate time processing mechanisms in humanoid robots. In particular, we investigate how semantic representation (top-down) and hierarchical segmentation (bottom-up) of human demonstrations based on spatio-temporal object interactions can be combined to facilitate generalization of action durations. This would allow a robot to scale perceived and learnt temporal information of an action in order to perform the same and other actions with various temporal lengths.

Robots Exploring Tools as Extensions to their Bodies Autonomously (REBA+)

- Contact:

- Funding:

- Startdate:

2015

- Enddate:

2018

REBA

While neuroscientific research is unravelling a remarkable complexity and flexibility of body representations of biological agents during actions and the use of tools (Cardinali et al., 2012; Umilta et al., 2008; Maravita and Iriki, 2004), available approaches for representing body schemas for robots (Hoffmann et al., 2010a) still largely lack equally sophisticated, adaptive and dynamically extensible representations. A related and largely open challenge is to find rich representations that combine body morphology, control, and the exploitation of redundant degrees of freedom, and that offer strong priors for rapid learning. Such priors would in turn support the flexible adaptation and extension of these representations to realize capabilities such as tool use, or graceful degradation when parts of the body tree malfunction. This motivates the present project: to develop, implement and evaluate rich extensions of a robot's body schema, along with learning algorithms that use these representations as strong priors to enable rapid and autonomous tool use and flexible coping with novel mechanical linkages between the body, the grasped tool and target objects. As a major scientific contribution to Autonomous Learning, we aim to address these key aspects of interaction learning:

- enhancing the scope from the body morphology to a representation of body-tool-environment linkages

- enhancing the scope from a representation of morphology to a representation that includes control

- enhancing the scope from minimal DOF systems to systems that offer and exploit redundant degrees of freedom

I-SUPPORT

Description

The I-SUPPORT project envisions the development and integration of an innovative, modular, ICT-supported service robotics system that supports and enhances frail older adults’ motion and force abilities and assists them in successfully, safely and independently completing the entire sequence of bathing tasks, such as properly washing their back, their upper parts, their lower limbs, their buttocks and groin, and to effectively use the towel for drying purposes. Advanced modules of cognition, sensing, context awareness and actuation will be developed and seamlessly integrated into the service robotics system to enable the robotic bathing system.

KIT leads the tasks concerning the learning of motion primitives from human observation and kinesthetic teaching for a soft robot arm that should help with washing and drying tasks. The learned motion primitives should be represented in a way that allows adaptation to different contexts (soaping, washing, drying), body parts (back, upper and lower limbs, neck) and users. To achieve this, adaptive representations of the learned motion primitives will be developed that can account for different requirements, such as encoding different motion styles (circular and linear) and adapting to the different softness of different body parts. To enable the robot arm to handle different washing and drying tools with varying softness, we will investigate how motion primitives can be enriched with models that encode correlations between object properties and action parameters. Furthermore, KIT is addressing the personalization and adaptation of the robotic bathing system to the user by taking into account the users' preferences and previous sensorimotor experience. Based on a reference model of the human body, the Master Motor Map, which defines the kinematics and dynamics of the human body with regard to global body parameters such as height and weight, we will derive individual models of the different users. These models will be used to generate initial washing and/or drying behavior, which will then be refined based on sensorimotor experience obtained from the robot arm.

WALK-MAN: Whole-body Adaptive Locomotion and Manipulation

- Contact:

- Funding:

EU-FP7

- Startdate:

2013

- Enddate:

2017

Description

WALK-MAN aims at enhancing the capabilities of existing humanoid robots, permitting them to assist or replace humans in emergency situations, including rescue operations in damaged or dangerous sites such as destroyed buildings or power plants. The WALK-MAN robot will demonstrate human-level capabilities in locomotion, balance and manipulation. The scenario challenges the robot in several ways: walking on unstructured terrain, in cluttered environments and among a crowd of people, as well as crawling over a debris pile. The project's results will be evaluated using realistic scenarios, also consulting civil defence bodies.

KIT leads the tasks concerning multimodal perception for loco-manipulation and the representation of whole-body affordances. The partly unknown environments in which the robot has to operate motivate an exploration-based approach to perception. This approach will integrate whole-body actions and multiple perceptual modalities such as visual, haptic, inertial and proprioceptive sensory information. For the representation of whole-body affordances, i.e. joint perception-action representations of whole-body actions associated with objects and/or environmental elements, we will rely on our previous work on Object-Action Complexes (OACs), a grounded representation of sensorimotor experience that binds objects, actions, and attributes in a causal model and links sensorimotor information to symbolic information. We will investigate the transferability of grasping OACs to balancing OACs, inspired by the analogy between a stable whole-body configuration and a stable grasp of an object.

KoroiBot: Improving humanoid walking capabilities by human-inspired mathematical models, optimization and learning

- Contact:

- Funding:

EU-FP7

- Startdate:

2013

- Enddate:

2016

Description

KoroiBot is a three-year project funded by the European Commission under FP7-ICT-2013-10. The goal of the project is to investigate the way humans walk, e.g., on stairs and slopes, on soft and slippery ground, and over beams and seesaws, and to create mathematical models and learning methods for humanoid walking. The project will study human walking, develop techniques for increased humanoid walking performance and evaluate them on existing state-of-the-art humanoid robots.

KIT leads the tasks concerning human walking experiments, the establishment of a large-scale human walking database and the development of human and humanoid models as a basis for deriving rules that transfer general motion and control laws between different embodiments and for generating different walking types. Based on the developed models and transfer rules, we will study how to implement balancing and push recovery strategies that deal with different types of perturbation in free and constrained situations. Furthermore, we will investigate the role of prediction in walking as well as the role of different sensory feedback, such as vision, vestibular input and foot haptics, in balancing. To this end, we will implement a control and action selection schema emphasizing predictive control mechanisms, which rely on the estimation of expected perturbations based on multimodal sensory feedback and past sensorimotor experience. The control schema will be validated in the context of predicting and selecting push recovery actions.

XPERIENCE: Robots Bootstrapped through Learning from Experience

- Contact:

- Funding:

EU-FP7

- Startdate:

2011

- Enddate:

2015

Description

Current research in enactive, embodied cognition is built on two central ideas: 1) physical interaction with and exploration of the world allows an agent to acquire and extend intrinsically grounded cognitive representations, and 2) representations built from such interactions are much better adapted to guiding behaviour than human-crafted rules or control logic. Exploration and discriminative learning, however, are relatively slow processes. Humans, on the other hand, are able to rapidly create new concepts and react to unanticipated situations using their experience. “Imagining” and “internal simulation”, i.e. generative mechanisms that rely on prior knowledge, are employed to predict the immediate future and are key to increasing the bandwidth and speed of cognitive development. Current artificial cognitive systems are limited in this respect, as they do not yet make efficient use of such generative mechanisms to extend their cognitive properties.

Solution

The Xperience project will address this problem by structural bootstrapping, an idea taken from child language acquisition research. Structural bootstrapping is a method of building generative models, leveraging existing experience to predict unexplored action effects and to focus the hypothesis space for learning novel concepts. This developmental approach enables rapid generalization and acquisition of new knowledge and skills from little additional training data. Moreover, thanks to shared concepts, structural bootstrapping enables enactive agents to communicate effectively with each other and with humans. Structural bootstrapping can be employed at all levels of cognitive development (e.g. sensorimotor, planning, communication).

Project Goals

- Xperience will demonstrate that state-of-the-art enactive systems can be significantly extended by using structural bootstrapping to generate new knowledge. This process is founded on explorative knowledge acquisition and subsequently validated through experience-based generalization.

- Xperience will implement, adapt, and extend a complete robot system for automating introspective, predictive, and interactive understanding of actions and dynamic situations. Xperience will evaluate and benchmark this approach on existing state-of-the-art humanoid robots, integrating the different components into a complete system that can interact with humans.

Expected Impact

By equipping embodied artificial agents with the means to exploit prior experience via generative inner models, the methods to be developed here are expected to impact a wide range of autonomous robotics applications that benefit from efficient learning through exploration, predictive reasoning and external guidance.

HEiKA-EXO: Optimization-based development and control of an exoskeleton for medical applications

- Contact:

- Funding:

HEiKA

- Startdate:

January 2013

- Enddate:

December 2013

Description

About GRASP

GRASP is an Integrated Project funded by the European Commission through its Cognition Unit under the Information Society Technologies of the seventh Framework Programme (FP7). The project was launched on 1st of March 2008 and will run for a total of 48 months.

The aim of GRASP is the design of a cognitive system capable of performing grasping and manipulation tasks in open-ended environments, dealing with novelty, uncertainty and unforeseen situations. To meet the aim of the project, studying the problem of object manipulation and grasping will provide a theoretical and measurable basis for system design that is valid in both human and artificial systems. This is of utmost importance for the design of artificial cognitive systems that are to be deployed in real environments and interact with humans and other agents. Such systems need the ability to exploit the innate knowledge and self-understanding to gradually develop cognitive capabilities. To demonstrate the feasibility of our approach, we will instantiate, implement and evaluate our theories and hypotheses on robot systems with different embodiments and complexity.

GRASP goes beyond the classical perceive-act or act-perceive approach and implements a predict-act-perceive paradigm that originates from findings of human brain research and results of mental training in humans, where self-knowledge is retrieved through different emulation principles. Knowledge of grasping in humans can be used to provide the initial model of the grasping process, which then has to be grounded in the specific embodiment through introspection. To achieve open-ended cognitive behaviour, we use surprise to steer the generation of grasping knowledge and modelling.

PACO-PLUS: Perception, Action and Cognition through Learning of Object-Action Complexes

- Contact:

- Funding:

EU-FP6

- Startdate:

2006

- Enddate:

2010

About PACO-PLUS

PACO-PLUS is an Integrated Project funded by the European Commission through its Cognition Unit under the Information Society Technologies of the sixth Framework Programme (FP6). The project was launched on 1 February 2006 and will run for a total of 48 months.

PACO-PLUS brings together an interdisciplinary research team to design and build cognitive robots capable of developing perceptual, behavioural and cognitive categories that can be used, communicated and shared with humans and other artificial agents. To demonstrate our approach, we are building robot systems that will display increasingly advanced cognitive capabilities over the course of the programme. They will learn to operate in the real world and to interact and communicate with humans. To do this, they must model and reflectively reason about their perceptions and actions in order to learn, act and react appropriately. We hypothesize that such understanding can only be attained by embodied agents and requires the simultaneous consideration of perception and action.

- Objects and actions are inseparably intertwined in cognitive processing; that is, “Object-Action Complexes” (OACs) are the building blocks of cognition.

- Cognition is based on reflective learning, contextualizing and then reinterpreting OACs to learn more abstract OACs, through a grounded sensing and action cycle.

- The core measure of effectiveness for all learned cognitive structures is: Do they increase situation reproducibility and/or reduce situational uncertainty in ways that allow the agent to achieve its goals?

Collaborative Research Center (SFB) 588: Humanoid Robots - Learning and Cooperating Multimodal Robots

- Contact:

- Funding:

- Startdate:

1 July 2001

- Enddate:

30 June 2012

Description

The Collaborative Research Center 588 "Humanoid Robots - Learning and Cooperating Multimodal Robots" was established on 1 July 2001 by the Deutsche Forschungsgemeinschaft (DFG) and will run until 30 June 2012.

The goal of this project is to develop concepts, methods and concrete mechatronic components for a humanoid robot that will be able to share its activity space with a human partner. With the aid of this partially anthropomorphic robot system, it will be possible to step out of the "robot cage" and establish direct contact with humans.

The term multimodality covers communication modalities that are intuitive for the user, such as speech, gesture and haptics (physical contact between the human and the robot), which will be used to command or instruct the robot system directly.

Concerning cooperation between the user and the robot, for example in the joint manipulation of objects, it is important for the robot to recognise the human's intention, to remember the actions that have already been carried out together and to apply this knowledge correctly in each individual case. Great effort is spent on safety, as it is a very important aspect of human-machine cooperation.

An outstanding property of the system is its ability to learn. This makes it possible to confront the system with new, previously unknown problems, for example new terms and new objects. Even new motions can be learned with the help of the human and corrected interactively by the user.

The Collaborative Research Center 588 is assigned to the Department of Informatics. More than 40 scientists and 13 institutes are involved in this project. They belong to the Department of Informatics, the Faculty of Electrical and Information Engineering, the Faculty of Mechanical Engineering and the Faculty of Humanities and Social Sciences, as well as the Fraunhofer Institute of Optronics, System Technologies and Image Exploitation (IOSB) and the Forschungszentrum Informatik Karlsruhe (FZI).

EU FET Flagship Initiative "Robot Companions for Citizens"

- Contact:

- Funding:

EU-FET

- Startdate:

2011

- Enddate:

2012

Description

The coordination action CA-RoboCom will design and describe the FET Flagship initiative “Robot Companions for Citizens” (RCC), including its scientific and technological framework, governance, financial and legal structure, funding scheme, competitiveness strategy and risk analysis.

The FET Flagship initiative RCC will realize a unique and unprecedented multidisciplinary science and engineering program supporting a radically new approach to machines and how we deploy them in our society.

Robot Companions for Citizens is an ecology of sentient machines that will help and assist humans in the broadest possible sense to support and sustain our welfare. RCC will have soft bodies based on the novel integration of solid articulated structures with flexible properties and display soft behavior based on new levels of perceptual, cognitive and emotive capabilities. RCC will be cognizant and aware of their physical and social world and respond accordingly. RCC will attain these properties because of their grounding in the most advanced sentient machines we know: animals.

Robot Companions for Citizens will validate our understanding of the general design principles underlying biological bodies and brains, establishing a positive feedback between science and engineering.

Driven by the vision and ambition of RCC, CA-RoboCom will, by means of an appropriate outreach strategy, involve all pertinent stakeholders: science and technology, society, finance, politics and industry. Beyond the commitment of its consortium, CA-RoboCom will involve a wide range of external experts in its working groups, its Advisory Board, and its European and International Cooperation board. The CA-RoboCom consortium believes that, given the potentially transformative and disruptive effects of RCC on our society, their development and deployment must be based on the broadest possible support platform.